The world of artificial intelligence is buzzing with excitement once again, thanks to Mistral AI’s latest release: Mistral Small 3.1. Announced on March 17, 2025, this open-source, multimodal language model is making waves as a lightweight yet powerful contender in the AI ecosystem. With a promise to outperform models like Gemma 3 and GPT-4o Mini, Mistral Small 3.1 is designed to deliver top-tier performance without the hefty computational demands of larger models. Let’s dive into what makes this release so special and why it’s capturing the attention of developers, businesses, and AI enthusiasts alike.

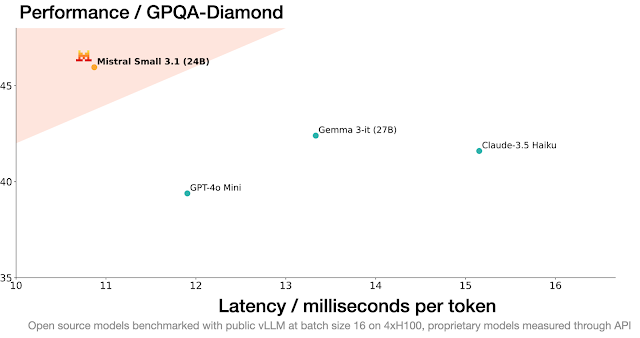

What is Mistral Small 3.1?

Mistral Small 3.1 is the latest iteration in Mistral AI’s lineup of efficient, high-performing language models. Building on the foundation of its predecessor, Mistral Small 3 (released in January 2025), this 24-billion-parameter model introduces significant upgrades, including multimodal capabilities and an expanded context window. Released under the permissive Apache 2.0 license, it’s free for anyone to use, modify, and deploy, whether for personal projects or commercial applications.

What sets Mistral Small 3.1 apart is its focus on efficiency. Despite its relatively modest size, it competes with models three times larger, delivering lightning-fast inference speeds of 150 tokens per second and a massive 128k token context window. It’s also lightweight enough to run on consumer-grade hardware like a single NVIDIA RTX 4090 or a MacBook with 32GB of RAM (when quantized). This makes it an accessible yet powerful tool for a wide range of users.
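To put those two headline numbers in perspective, here is a quick back-of-envelope sketch. It takes the 150 tokens per second and 128k figures at face value. The function names, the reading of "128k" as 128,000 tokens, and the 4-characters-per-token ratio are illustrative assumptions, not properties of Mistral's tokenizer.

```python
# Back-of-envelope helpers; every name here is illustrative, not a Mistral API.

TOKENS_PER_SECOND = 150    # throughput figure quoted for the model
CONTEXT_WINDOW = 128_000   # "128k" read as 128,000 tokens (assumption)
CHARS_PER_TOKEN = 4        # rough heuristic for English text (assumption)


def generation_seconds(output_tokens: int,
                       tokens_per_second: float = TOKENS_PER_SECOND) -> float:
    """Time to stream `output_tokens` at a steady decoding rate."""
    return output_tokens / tokens_per_second


def fits_in_context(text: str, reserved_for_output: int = 1_000) -> bool:
    """Crude check that a prompt plus a reserved output budget fits the window."""
    estimated_prompt_tokens = len(text) // CHARS_PER_TOKEN + 1
    return estimated_prompt_tokens + reserved_for_output <= CONTEXT_WINDOW


if __name__ == "__main__":
    print(f"600-token reply: {generation_seconds(600):.1f} s")
    report = "x" * 400_000  # stand-in for a report of about 400k characters
    print("report fits:", fits_in_context(report))
```

Real throughput depends on hardware, batch size, and quantization, so treat the result as an order-of-magnitude guide rather than a measurement.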

Key Features of Mistral Small 3.1

- Multimodal Capabilities: Unlike its text-only predecessors, Mistral Small 3.1 can process both text and images. This opens up a world of possibilities, from analyzing visual content to providing insights based on combined text-image inputs. Imagine a virtual assistant that can read a document, interpret a chart, and answer questions, all in one go.
- Multilingual Support: The model supports dozens of languages, including English, French, German, Spanish, Italian, Chinese, Japanese, Korean, Hindi, Arabic, and more. This makes it a versatile choice for global applications, from customer support to content creation.
- Expanded Context Window: With a 128k token context window (up from 32k in Mistral Small 3), Mistral Small 3.1 can handle much longer conversations and documents without losing track of the context. Whether you’re summarizing a lengthy report or engaging in an extended chat, this model has you covered.
- Lightning-Fast Performance: Optimized for low latency, Mistral Small 3.1 clocks in at 150 tokens per second, faster than many competitors in its class. Its architecture, with fewer layers than rival models, is designed for rapid processing without sacrificing accuracy.
- Agent-Centric Design: The model excels at function calling and structured JSON output, making it ideal for automated workflows and agent-based systems. Developers can integrate it into applications requiring quick decision-making or structured outputs.
- Open-Source Freedom: Released under Apache 2.0, Mistral Small 3.1 is fully open-source. This means you can fine-tune it, customize it, or even build entirely new models on top of it, without restrictive licensing barriers.
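To make the agent-centric point concrete, here is a minimal sketch of the application side of function calling: describing a tool, then parsing a JSON tool call and running the matching Python function. The tool name `get_order_status`, the `dispatch` helper, and the sample model output are all hypothetical. The schema follows the JSON-schema style used by OpenAI-compatible chat APIs, and the exact shape a given server returns may differ.

```python
import json


# Hypothetical tool the model may call; the name and fields are illustrative.
def get_order_status(order_id: str) -> dict:
    """Stand-in for a real lookup; returns canned data."""
    return {"order_id": order_id, "status": "shipped"}


# Tool description in the JSON-schema style used by OpenAI-compatible chat APIs.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the shipping status of an order.",
            "parameters": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
            },
        },
    }
]

# Maps tool names the model may emit to local Python callables.
REGISTRY = {"get_order_status": get_order_status}


def dispatch(tool_call_json: str) -> dict:
    """Parse a JSON tool call emitted by the model and run the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in REGISTRY:
        raise ValueError(f"unknown tool: {name}")
    arguments = call["arguments"]
    # Some APIs return arguments as a JSON-encoded string; accept both forms.
    if isinstance(arguments, str):
        arguments = json.loads(arguments)
    return REGISTRY[name](**arguments)


if __name__ == "__main__":
    # Made-up example of what a model reply might look like.
    model_output = '{"name": "get_order_status", "arguments": {"order_id": "A-1001"}}'
    print(dispatch(model_output))
```

Rejecting unknown tool names before calling anything keeps the model from triggering code you did not explicitly expose.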

How Does It Stack Up?

Mistral AI claims that Small 3.1 outperforms comparable models like Google’s Gemma 3 and OpenAI’s GPT-4o Mini across multiple benchmarks, including text comprehension, multilingual tasks, and long-context retention. It even holds its own against larger models like Llama 3.3 70B, all while using fewer computational resources.

For instance, in the MMLU benchmark (Massive Multitask Language Understanding), Mistral Small 3 scored an impressive 81%, showcasing its prowess across diverse subjects like humanities, sciences, and math. In GPQA (Graduate-Level Google-Proof Q&A), it surpassed GPT-4o Mini and Gemma 3, pointing to its strength on reasoning-heavy, expert-level science questions. Add its multimodal capabilities into the mix, and you’ve got a model that punches well above its weight class.

Why No Synthetic Data?

Interestingly, Mistral Small 3.1 (like its predecessor) avoids synthetic data in its training pipeline. Synthetic data (artificially generated text, often created by other AI models) has become common in LLM training, but it can introduce biases or an “echo chamber” effect, where models reinforce each other’s limitations. By sticking to real-world data, Mistral ensures Small 3.1 remains a solid foundation for further customization, especially for reasoning-focused applications. This choice has sparked debate in the AI community, with some praising the purity of its training process and others noting the scalability benefits synthetic data can offer.

Use Cases: Where Mistral Small 3.1 Shines

Mistral Small 3.1’s blend of speed, efficiency, and versatility makes it a Swiss Army knife for AI applications. Here are some standout use cases:

- Fast-Response Conversational AI: Need a chatbot that responds instantly? Small 3.1’s low latency and instruction-following capabilities make it perfect for virtual assistants, customer service bots, and real-time support systems.
- Multimodal Workflows: From document verification to image-based diagnostics, the model’s ability to handle text and images together is a game-changer for industries like healthcare, finance, and customer support.
- Fine-Tuned Expertise: Developers can fine-tune Small 3.1 for specific domains (think legal advice, medical triaging, or technical support), creating tailored, high-accuracy solutions without needing massive computational resources.
- Local Inference for Privacy: For hobbyists or organizations handling sensitive data, Small 3.1’s ability to run locally on modest hardware ensures privacy without relying on cloud-based processing.
- On-Device Applications: Its lightweight design makes it ideal for robotics, automotive systems, or edge devices where real-time voice commands and automation are critical.
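For the multimodal workflows above, a request usually pairs an image with a text question in a single user message. The sketch below only builds the request body and does not send it. It assumes the model is served behind an OpenAI-compatible chat endpoint (vLLM offers one), and the model id and the `build_image_question` helper are assumptions to check against the model card and your server's documentation.

```python
import base64
import json

# Assumed Hugging Face model id; check the model card for the exact name.
MODEL_ID = "mistralai/Mistral-Small-3.1-24B-Instruct-2503"


def build_image_question(image_bytes: bytes, question: str,
                         mime: str = "image/png") -> dict:
    """Build a chat request body that pairs one image with a text question."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL_ID,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        # Inline the image as a base64 data URL.
                        "image_url": {"url": f"data:{mime};base64,{encoded}"},
                    },
                ],
            }
        ],
        "max_tokens": 256,
    }


if __name__ == "__main__":
    placeholder = b"\x89PNG\r\n\x1a\n"  # placeholder bytes, not a real image
    body = build_image_question(placeholder, "What does this chart show?")
    print(json.dumps(body, indent=2))
```

You would POST this body as JSON to the server's chat completions route. Inlining the image as a data URL keeps the example self-contained, though a hosted image URL works in the same field on servers that allow it.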

How to Get Started

Ready to try Mistral Small 3.1? It’s available for download on Hugging Face (look for Mistral-Small-3.1-24B-Base-2503 and the instruction-tuned version, Instruct-2503). You can also test it via Mistral AI’s developer playground, La Plateforme, or integrate it through APIs on platforms like Google Cloud Vertex AI. NVIDIA NIM support is coming soon, promising even easier deployment.

For local use, tools like vLLM or Unsloth can help you run and fine-tune the model efficiently. Just ensure you’ve got at least 18GB of VRAM (or more for unquantized versions) if you’re running it on a GPU.
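Where does a VRAM figure like that come from? A rough, weights-only estimate is just parameter count times bytes per parameter. The sketch below uses the 24-billion-parameter figure from this post. The helper name is illustrative, and the estimate deliberately ignores the KV cache, activations, and framework overhead, all of which add to the real requirement.

```python
PARAMS = 24e9  # 24 billion parameters, as stated for Mistral Small 3.1


def weight_gigabytes(params: float, bits_per_param: int) -> float:
    """Weights-only footprint in GB (10^9 bytes); ignores KV cache and activations."""
    return params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_gigabytes(PARAMS, bits):.0f} GB")
```

On this estimate, 16-bit weights alone come to roughly 48 GB, well beyond the 24 GB on an RTX 4090, while 4-bit weights come to about 12 GB. That leaves headroom for the KV cache and sits in the same ballpark as the 18GB guidance above.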

The Bigger Picture

Mistral Small 3.1 isn’t just another model; it’s a statement. In an AI landscape dominated by massive, resource-hungry models, Mistral AI is proving that smaller can be smarter. By combining cutting-edge performance with accessibility, they’re democratizing AI for developers, businesses, and hobbyists alike. The open-source ethos, coupled with practical design choices like avoiding synthetic data, positions Small 3.1 as a foundation for innovation, whether that’s building reasoning-focused models or powering next-gen applications.

As the AI race heats up, Mistral Small 3.1 stands out as a reminder: power doesn’t always come from size. Sometimes, it’s about efficiency, adaptability, and a little French ingenuity.

What do you think of Mistral Small 3.1? Are you planning to give it a spin for your next project? Let me know in the comments—I’d love to hear your thoughts!