Introduction

On March 12, 2025, Google unveiled Gemma 3, the latest chapter in its family of open, lightweight AI models. Built on the same research and technology as the Gemini 2.0 models, Gemma 3 promises to bring state-of-the-art performance to developers and researchers without the need for massive computational resources. I’m excited to break down what makes Gemma 3 a game-changer in the AI landscape, from its multimodal capabilities to its accessibility on a single GPU or TPU. Let’s dive in!

What is Gemma 3?

Gemma 3 is a collection of advanced, open-weight AI models designed for portability and efficiency. Available in four sizes, 1 billion (1B), 4 billion (4B), 12 billion (12B), and 27 billion (27B) parameters, these models cater to a wide range of use cases, from mobile apps to high-performance workstations. Unlike its predecessors, Gemma 3 introduces multimodal capabilities, a massive context window, and enhanced performance that rivals larger models, all while staying lightweight enough to run on modest hardware.

Google’s goal? To empower the AI community with tools that are powerful, flexible, and responsible. With over 100 million downloads of previous Gemma models and 60,000 community variants, Gemma 3 builds on this momentum with features users have been clamoring for.

Standout Features of Gemma 3


Multimodal Magic

The 4B, 12B, and 27B models can process both text and images (and even short videos), thanks to an integrated vision encoder based on SigLIP. From answering questions about pictures to generating narratives from visuals, Gemma 3 opens up new possibilities. The 1B model is text-only, but it’s still a powerhouse in its own right.

Massive Context Window

Need to analyze a long document or hold an extended conversation? The 1B model handles up to 32,000 tokens, while the larger models boast a whopping 128,000-token context window, perfect for tasks like legal analysis or deep research.
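To make those numbers concrete, here is a minimal Python sketch that checks whether a prompt plus the tokens you want to generate fits in each model’s window. It assumes the rounded figures quoted above (32,000 tokens for 1B, 128,000 for the larger models); `CONTEXT_WINDOW`, `fits_in_context`, and `tokens_remaining` are hypothetical helpers, not part of any Gemma library, and exact limits should be checked against the official model card.

```python
# Rounded context-window sizes quoted in this article (tokens).
# Assumption: exact limits in the released checkpoints may differ slightly.
CONTEXT_WINDOW = {
    "1b": 32_000,
    "4b": 128_000,
    "12b": 128_000,
    "27b": 128_000,
}


def fits_in_context(model_size: str, prompt_tokens: int, max_new_tokens: int) -> bool:
    """True if the prompt plus the requested generation fits in the window."""
    window = CONTEXT_WINDOW[model_size.lower()]
    return prompt_tokens + max_new_tokens <= window


def tokens_remaining(model_size: str, prompt_tokens: int) -> int:
    """Tokens left for generation after the prompt (never negative)."""
    return max(0, CONTEXT_WINDOW[model_size.lower()] - prompt_tokens)


if __name__ == "__main__":
    # A hypothetical 90,000-token contract: too long for 1B, fine for 27B.
    print(fits_in_context("1b", 90_000, 1_000))
    print(fits_in_context("27b", 90_000, 1_000))
    print(tokens_remaining("27b", 90_000))
```

Counting real prompts requires running the model’s own tokenizer; the sketch only does the budget arithmetic once you have a token count.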

Multilingual Mastery

Pretrained on data spanning over 140 languages, with out-of-the-box support for more than 35, Gemma 3 uses twice as much multilingual training data as its predecessor, Gemma 2. A new tokenizer improves support across diverse linguistic tasks, from translation to culturally nuanced responses.

Single GPU/TPU Efficiency

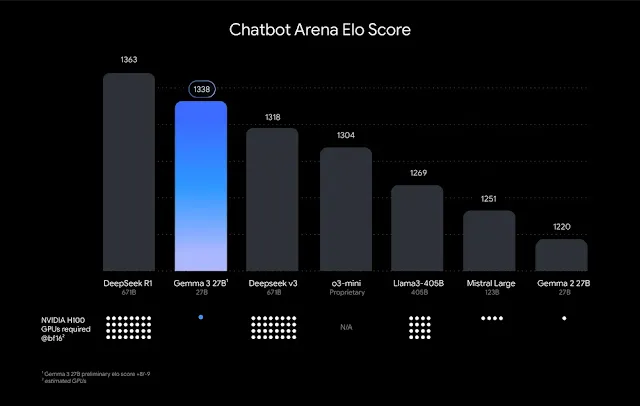

Google touts Gemma 3 as the “world’s best single-accelerator model.” The 27B version, trained on 14 trillion tokens, delivers top-tier performance on a single NVIDIA H100 GPU or TPU, outpacing competitors like Meta’s Llama 3 405B and OpenAI’s o3-mini in preliminary human preference evaluations on LMArena, where it posted an Elo score of 1338.
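Why can a 27B-parameter model fit on one accelerator? A rough estimate is parameter count times bytes per parameter. The sketch below is a back-of-the-envelope calculation under stated assumptions: nominal parameter counts, an 80 GB H100, and no allowance for the KV cache, activations, or runtime overhead, all of which add to real usage. `weight_memory_gb` and the constants are hypothetical names for illustration.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumptions: nominal parameter counts; KV cache, activations and runtime
# overhead are ignored, so real memory usage is higher.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}
H100_MEMORY_GB = 80  # assumes the 80 GB H100 variant
GEMMA3_SIZES = {"1B": 1e9, "4B": 4e9, "12B": 12e9, "27B": 27e9}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Gigabytes (1 GB = 1e9 bytes) needed just to store the weights."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, params in GEMMA3_SIZES.items():
        for dtype in ("bf16", "int4"):
            gb = weight_memory_gb(params, dtype)
            fits = gb < H100_MEMORY_GB
            print(f"{name} @ {dtype}: {gb:5.1f} GB (fits one 80 GB H100: {fits})")
    print(f"27B @ fp32: {weight_memory_gb(27e9, 'fp32'):.1f} GB")
```

Under these assumptions, the 27B weights take about 54 GB in bf16, leaving headroom on an 80 GB card, whereas full fp32 weights (about 108 GB) would not fit.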

Quantized for Speed

Official quantized versions shrink model size and computational needs while maintaining high accuracy, making Gemma 3 well suited to edge devices like phones and laptops. The quantized 1B model, at just 529MB, can process a page of text in under a second.
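Quantization stores weights as low-bit integers plus a scale factor. The sketch below shows the simplest variant, symmetric per-tensor round-to-nearest quantization, in plain Python. It is a generic illustration of the idea, not Google’s recipe for the official Gemma 3 checkpoints, and `quantize_symmetric` / `dequantize` are hypothetical names.

```python
def quantize_symmetric(weights: list[float], bits: int = 8) -> tuple[list[int], float]:
    """Symmetric per-tensor round-to-nearest quantization.

    Maps each float to a signed integer in [-qmax, qmax] with one shared scale,
    where qmax = 2**(bits - 1) - 1 (127 for int8, 7 for int4).
    """
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Clamp guards against floating-point noise pushing a value past qmax.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the integers and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0, 0.9]
    for bits in (8, 4):
        q, scale = quantize_symmetric(weights, bits)
        restored = dequantize(q, scale)
        max_err = max(abs(a - b) for a, b in zip(weights, restored))
        print(f"int{bits}: q={q}, max error={max_err:.4f} (bound: {scale / 2:.4f})")
```

Going from 16-bit to 4-bit storage cuts weight memory by 4x, and each value’s rounding error is at most half the scale. Because a single outlier inflates that scale, practical schemes typically use finer-grained (per-channel or per-block) scales or train with quantization in the loop.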

Safety and Customization

Alongside Gemma 3, Google launched ShieldGemma 2, a 4B-parameter image safety classifier that flags dangerous, explicit, or violent content.

Developers can fine-tune Gemma 3 using tools like JAX, PyTorch, or Keras, tailoring it to specific needs while keeping safety in check.

How It’s Made

Gemma 3’s development is a masterclass in optimization. Trained on Google’s TPU hardware (including TPUv5e) with the JAX framework, it leverages a mix of techniques:

- Distillation from larger models for efficiency.

- Reinforcement Learning from human, machine, and execution feedback (RLHF, RLMF, RLEF) to boost math, coding, and instruction-following skills.

- Architectural Tweaks like QK-Norm in place of Gemma 2’s soft-capping, sliding window attention (1024 tokens) in local layers interleaved with global attention layers, and a wider MLP design for better reasoning.
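Two of these tweaks are easy to sketch. The pure-Python code below builds a causal sliding-window attention mask and an interleaved local/global layer schedule (assuming the five-local-layers-per-global-layer ratio described in Google’s Gemma 3 technical report), then computes a QK-Norm attention logit by RMS-normalizing the query and key before the scaled dot product. All helper names are hypothetical; real implementations operate on batched tensors, and their QK-Norm includes learnable scale parameters that this sketch omits.

```python
import math


def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal (j <= i) and local: position i sees itself plus the previous
    window - 1 positions (assumed convention: the window includes the current token).
    """
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


def layer_schedule(num_layers: int, local_per_global: int = 5) -> list[str]:
    """Label each layer 'local' (sliding window) or 'global' (full causal attention).

    Every group of local_per_global + 1 layers ends with one global layer.
    """
    period = local_per_global + 1
    return ["global" if (k + 1) % period == 0 else "local" for k in range(num_layers)]


def rms_norm(x: list[float], eps: float = 1e-6) -> list[float]:
    """Scale a vector to unit root-mean-square (no learnable gain in this sketch)."""
    inv_rms = 1.0 / math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v * inv_rms for v in x]


def qk_norm_score(q: list[float], k: list[float]) -> float:
    """Attention logit with QK-Norm: normalize q and k, then scaled dot product.

    After normalization |score| <= sqrt(d) by Cauchy-Schwarz, so logits stay
    bounded without soft-capping.
    """
    qn, kn = rms_norm(q), rms_norm(k)
    return sum(a * b for a, b in zip(qn, kn)) / math.sqrt(len(q))


if __name__ == "__main__":
    # Toy sizes: 6 tokens with a window of 3 (Gemma 3 uses 1024).
    for row in sliding_window_causal_mask(6, 3):
        print("".join("x" if allowed else "." for allowed in row))
    print(layer_schedule(12))
    # Very large raw activations still yield a bounded logit.
    print(qk_norm_score([1000.0, 0.0, 0.0, 0.0], [1000.0, 0.0, 0.0, 0.0]))
```

The payoff of the local layers is memory: their KV cache only needs to hold the last `window` tokens, so only the occasional global layer pays the full cost of a 128K-token context.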

Why Gemma 3 Matters

Gemma 3 isn’t just another AI model; it’s a shift toward decentralized, accessible intelligence. Posts on X highlight its edge-device optimization and fine-tuning potential, suggesting it could challenge giants like Llama or Qwen if Google nails the customization ecosystem. Its ability to run locally enhances privacy and cuts cloud costs, while its open-weight design invites innovation from the global developer community.

For researchers, the Gemma 3 Academic program offers Google Cloud credits worth $10,000 per award, and platforms like Hugging Face, Kaggle, and Google AI Studio make it easy to jump in. NVIDIA has also optimized Gemma 3 for its hardware, so it runs well on everything from Jetson Nano to Blackwell GPUs.

Real-World Impact

Picture this: A student uses the 1B model on their phone to summarize a textbook offline. A developer fine-tunes the 12B model to generate game dialogue from in-game visuals. A researcher deploys the 27B model on a single GPU to analyze multilingual datasets. Gemma 3’s versatility shines across these scenarios, balancing power with practicality.

Looking Forward

Gemma 3 sets a new bar for open AI models, but it’s not without debate. The “open” label comes with licensing restrictions, sparking discussion about true openness. Still, its performance, especially the 27B model’s strong LMArena showing, signals Google’s intent to lead in compact, capable AI. Future iterations might refine fine-tuning toolchains or expand multimodal inputs further.

Conclusion

Gemma 3 is more than an upgrade: it’s a statement. By packing cutting-edge AI into a lightweight, single-accelerator package, Google is handing developers and researchers a tool to rethink what’s possible. Whether you’re building the next big app or probing the universe’s mysteries, Gemma 3 is ready to help.

Learn More: https://ai.google.dev/gemma

Try It Out: https://aistudio.google.com/

So, what will you create with it?